The rise of AI has transformed nearly every industry, including the legal industry. For HealthTech companies looking for efficient, effective legal support, AI provides an attractive option to get work done without the use of lawyers. But it comes with risks. How should companies think about the use of AI for some of their legal and compliance support?

The Arrival of AI in Legal Services

The question of whether to use AI for legal work has been settled—it is used widely by non-lawyers in companies as well as by lawyers to increase their productivity and effectiveness. The focus is now on how to use it effectively, especially in the HealthTech sector, which involves the convergence of two fundamentally different cultures:

The goal in HealthTech is often to combine these paradigms—move fast and do so in a trustworthy way. This is where the proper use of AI and legal expertise becomes critical.

Where AI Shines (and Where It Doesn't)

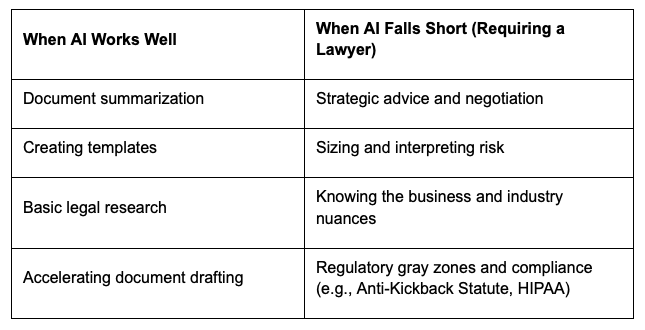

AI tools, particularly Large Language Models (LLMs), are a natural fit for legal work because the profession is language- and document-based. However, they cannot replace what human legal professionals offer.

The Danger of Validation

Another significant drawback of many LLMs is their tendency to validate: they agree with your inputs and reinforce your biases. In legal matters, this can be dangerous. When drafting patient consent language, for example, sounding compliant isn't the same as actually being compliant.

Lawyers, by contrast, are trained to be critical, to clarify, and to ask tough questions—arguing on behalf of your future self to resolve potential risks now.

Further, using public-facing AI tools for legal matters can jeopardize attorney-client privilege, making your conversation and the AI's output potentially discoverable in litigation.

A Lawyer in the Loop: A Better Way to Use AI

For any highly regulated HealthTech company, having a "lawyer in the loop" when using AI for documents and advice may be the optimal approach.

An executive should be asking their legal counsel (in-house or outside) these questions:

The Role of Lawyers is Shifting, Not Shrinking

AI efficiencies (even a modest 10-15% gain) should free up the legal team from lower-value, repetitive tasks (like NDAs) to focus on higher-value activities:

Ultimately, AI is a powerful tool, but it's not a silver bullet. Company leaders might consider pairing AI's productivity with the expertise of a lawyer to provide the strategic counsel, accountability, and risk assessment needed to build a trustworthy and successful HealthTech company.